|

Shaanxi University of Science and Technology, School of Arts and Sciences, 2015.09-2019.06

China Three Gorges University, School of Computer and Information Sciences, 2021.09-2024.07

Southern University of Science and Technology, 2023.2-2024.3
Visiting Student
Engaged in research on multimodality at the Tracking Group, Visual Intelligence & Perception Lab, SUSTech. Focus on multi-modal camouflaged object detection. The paper on Depth-aided Camouflaged Object Detection has been accepted by ACM MM 2023.

Southern University of Science and Technology, 2024.7-present
Research Assistant
I'm interested in camouflaged object detection, multi-modal approaches, computer vision, and machine learning.

Note that * indicates equal contribution. |

|

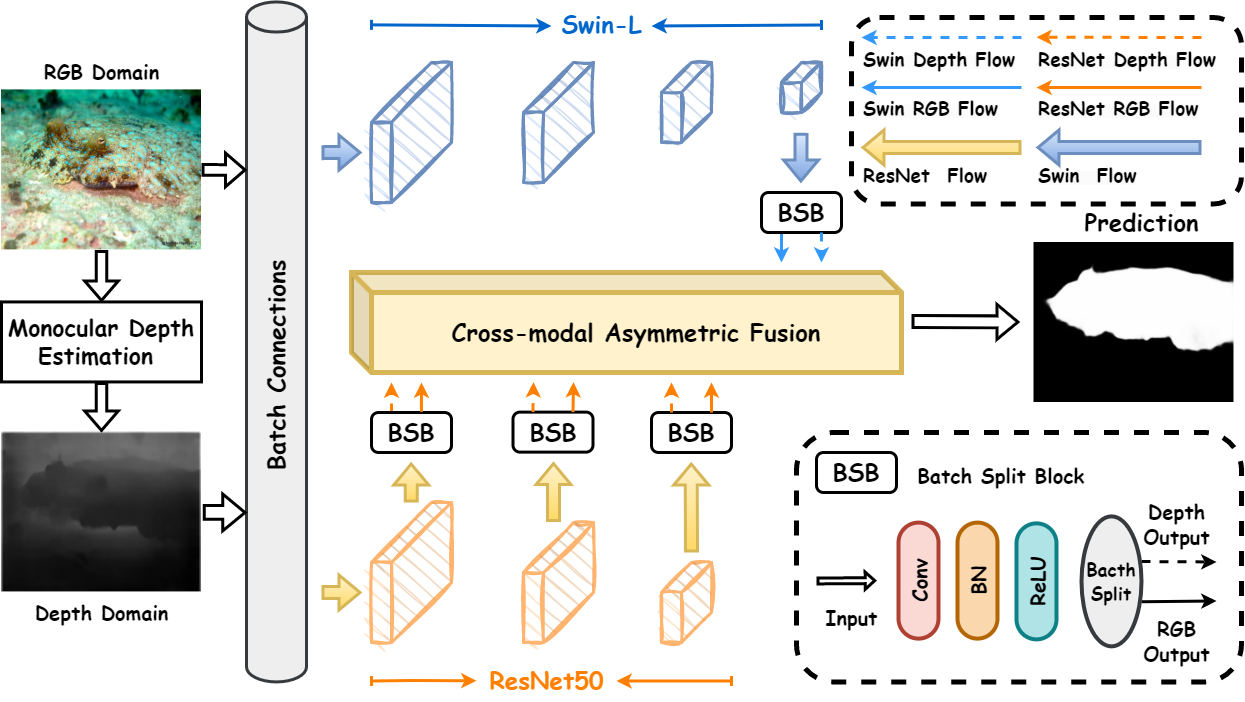

Qingwei Wang*, Jinyu Yang*, Xiaosheng Yu, Fangyi Wang, Peng Chen†, Feng Zheng†
ACM MM, 2023 (CCF A)
Project page / Paper

We introduce depth information as an additional cue to help break camouflage. |

|

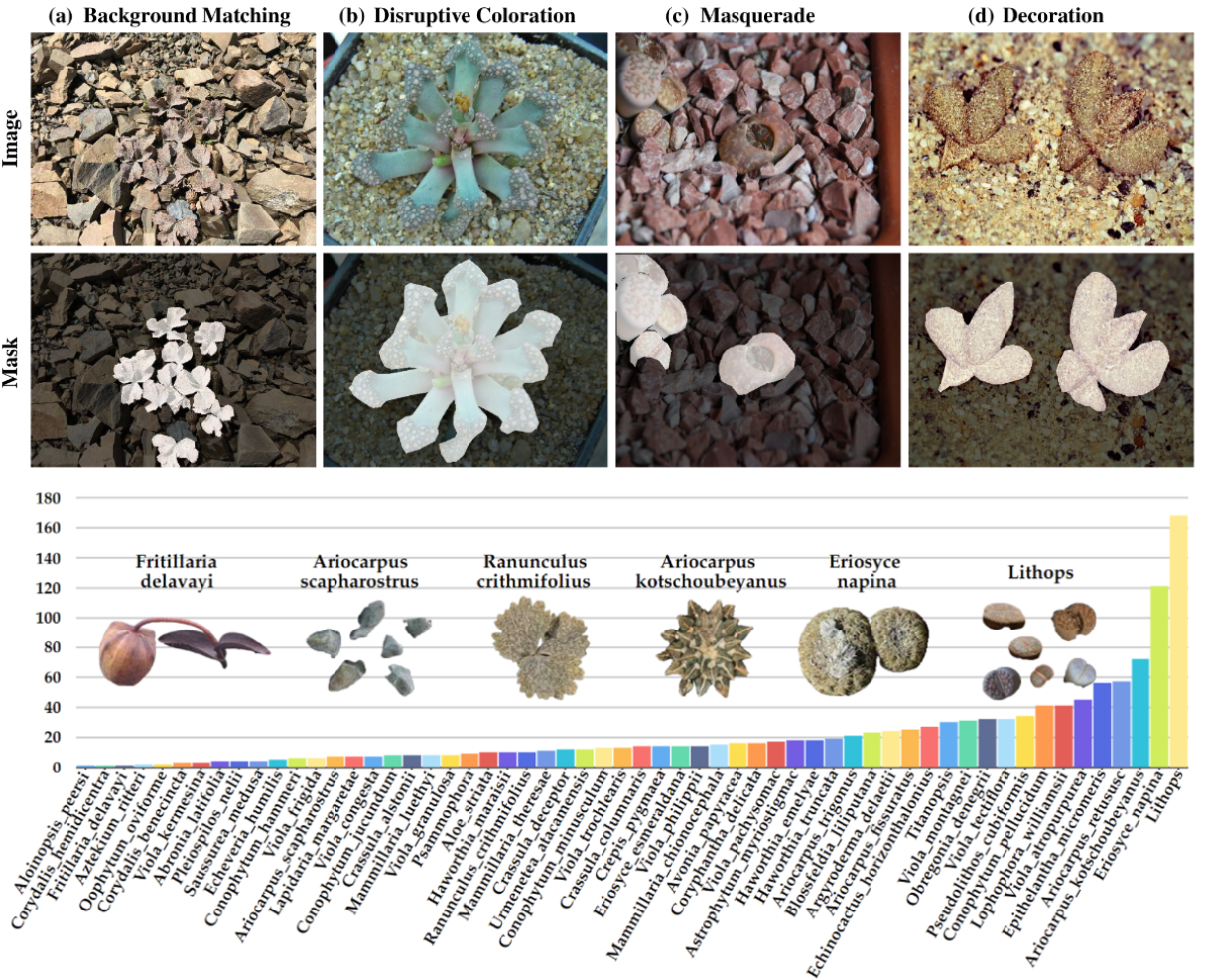

Jinyu Yang*, Qingwei Wang*, Feng Zheng†, Peng Chen, Aleš Leonardis, Deng-Ping Fan
arXiv, 2024
Project page / Paper

We present a new perspective on camouflaged object detection (COD) by introducing plant camouflage detection and making an initial effort toward it. First, we collect the first dataset for plant camouflage, named PlantCamo, which contains 1,250 images covering 58 kinds of camouflaged plants. Using the PlantCamo dataset, we conduct benchmark studies to understand plant camouflage detection. Inspired by plant-specific camouflage characteristics, we further propose a novel network, PCNet, for plant camouflage detection. |

|

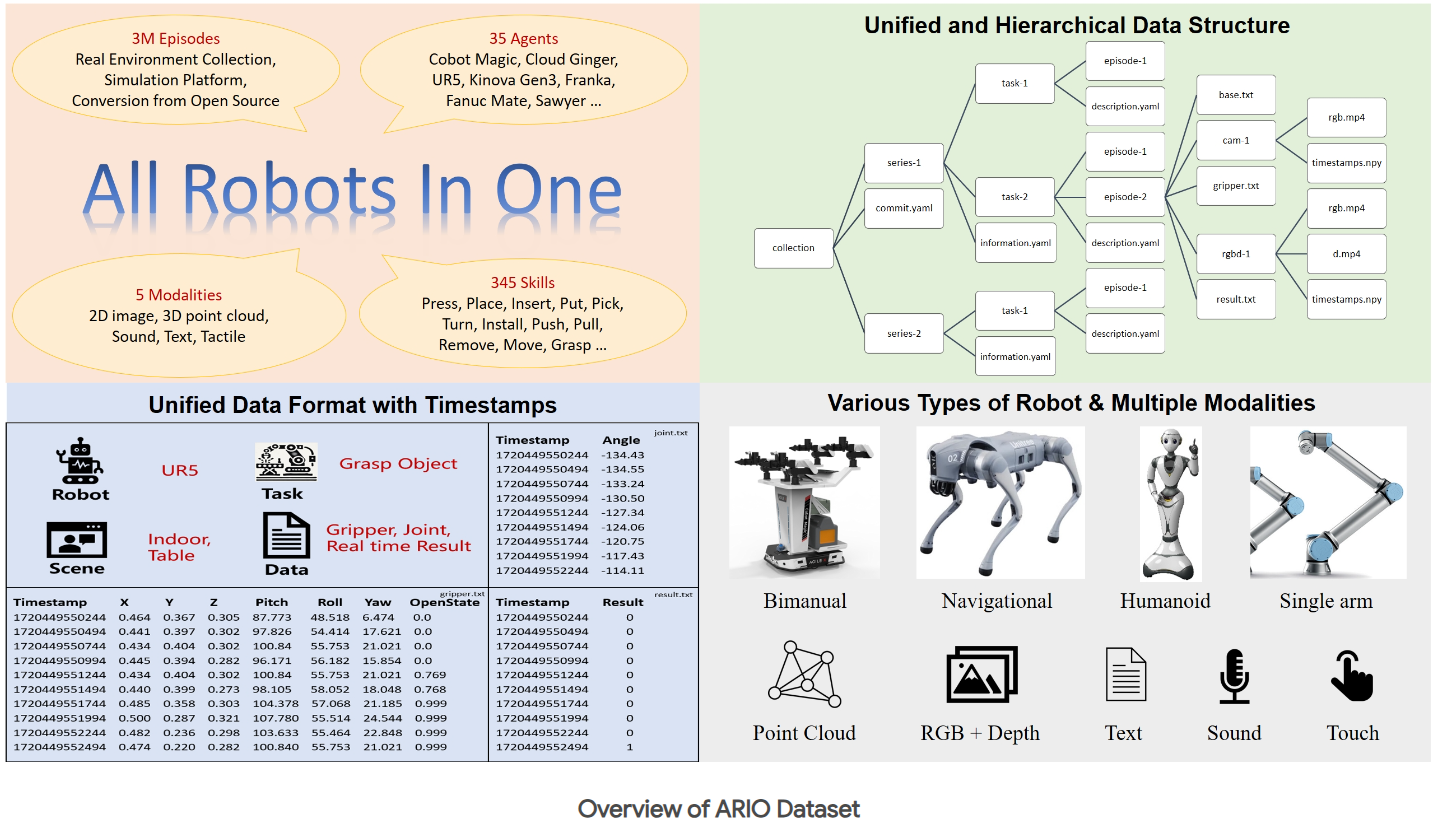

Zhiqiang Wang*, Hao Zheng*, Yunshuang Nie*, Wenjun Xu*, Qingwei Wang*, Hua Ye*, Zhe Li, Kaidong Zhang, Xuewen Cheng, Wanxi Dong, Chang Cai, Liang Lin, Feng Zheng†, Xiaodan Liang†
arXiv, 2024
Project page / Paper

We introduce ARIO (All Robots In One), a new data standard that enhances existing datasets by offering a unified data format, comprehensive sensory modalities, and a combination of real-world and simulated data. We also present a large-scale unified ARIO dataset, comprising approximately 3 million episodes collected from 258 series and 321,064 tasks. |

|

Feel free to steal this website's source code. Thanks to Jon Barron. |